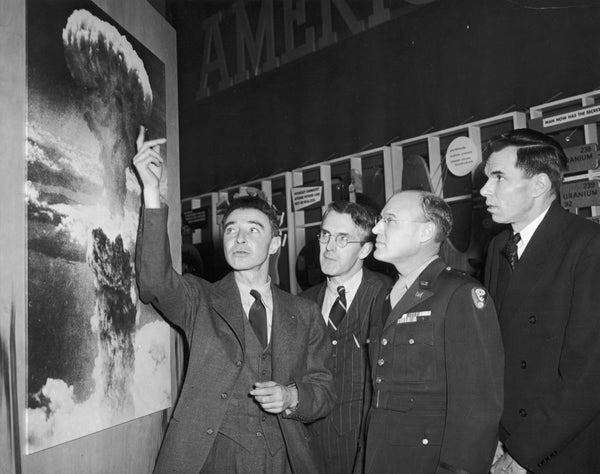

Eighty-one years ago, President Franklin D. Roosevelt tasked the young physicist J. Robert Oppenheimer with setting up a secret laboratory in Los Alamos, N.M. There, Oppenheimer and his colleagues worked to develop the world’s first nuclear weapons under the code name the Manhattan Project. Less than three years later, they succeeded. In 1945 the U.S. dropped these weapons on the residents of the Japanese cities of Hiroshima and Nagasaki, killing hundreds of thousands of people.

Oppenheimer became known as “the father of the atomic bomb.” Though he took misplaced satisfaction in his wartime service and technological achievement, he also became vocal about the need to contain this dangerous technology.

But the U.S. didn’t heed his warnings, and geopolitical fear instead won the day. The nation raced to deploy ever more powerful nuclear systems with scant recognition of the immense and disproportionate harm these weapons would cause. Officials also ignored Oppenheimer’s calls for greater international collaboration to regulate nuclear technology.

Oppenheimer’s example holds lessons for us today, too. We must not make the same mistake with artificial intelligence as we made with nuclear weapons.

We are still in the early stages of a promised artificial intelligence revolution. Tech companies are racing to build and deploy AI-powered large language models, such as ChatGPT. Regulators need to keep up.

Though AI promises immense benefits, it has already exposed its potential for harm and abuse. Earlier this year the U.S. surgeon general released a report on the youth mental health crisis. It found that one in three teenage girls considered suicide in 2021. The data are unequivocal: big tech is a big part of the problem, and AI will only amplify the manipulation and exploitation that its platforms already enable. The performance of AI rests on exploitative labor practices, both domestically and internationally. And massive, opaque AI models that are fed problematic data often exacerbate existing biases in society, affecting everything from criminal sentencing and policing to health care, lending, housing and hiring. In addition, running such energy-hungry AI models stresses ecosystems that are already fragile and reeling from the impacts of climate change.

AI also promises to make potentially perilous technology more accessible to rogue actors. Last year researchers asked a generative AI model to design new chemical weapons. It designed 40,000 potential weapons in six hours. An earlier version of ChatGPT generated bomb-making instructions. And a class exercise at the Massachusetts Institute of Technology recently demonstrated how AI can help create synthetic pathogens, which could potentially ignite the next pandemic. By spreading access to such dangerous information, AI threatens to become the computerized equivalent of an assault weapon or a high-capacity magazine: a vehicle for one rogue person to unleash devastating harm at a magnitude never seen before.

Yet companies and private actors are not the only ones racing to deploy untested AI. We should also be wary of governments pushing to militarize AI. Our nuclear launch system already rests precariously on mutually assured destruction, giving world leaders just a few minutes to decide whether to launch nuclear weapons in response to a perceived incoming attack. AI-powered automation of nuclear launch systems could soon eliminate the practice of keeping a “human in the loop,” a necessary safeguard to ensure that faulty computerized intelligence doesn’t lead to nuclear war, something that has nearly happened multiple times already. A military automation race, designed to give decision-makers greater ability to respond in an increasingly complex world, could lead to conflicts spiraling out of control. If countries rush to adopt militarized AI technology, we will all lose.

As in the 1940s, there is a critical window to shape the development of this emerging and potentially dangerous technology. Oppenheimer recognized that the U.S. should work with even its deepest antagonists to internationally control the dangerous side of nuclear technology, while still pursuing its peaceful uses. Castigating the man and the idea, the U.S. instead kicked off a vast cold war arms race by developing hydrogen bombs, along with related costly and occasionally bizarre delivery systems and atmospheric testing. The resultant nuclear-industrial complex disproportionately harmed the most vulnerable. Uranium mining and atmospheric testing caused cancer among groups that included residents of New Mexico, Marshallese communities and members of the Navajo Nation. The wasteful spending, opportunity cost and impact on marginalized communities were incalculable, to say nothing of the numerous close calls and the proliferation of nuclear weapons that ensued. Today we need both international cooperation and domestic regulation to ensure that AI develops safely.

Congress must act now to regulate tech companies to ensure that they prioritize the collective public interest. Congress should start by passing my Children’s Online Privacy Protection Act, my Algorithmic Justice and Online Transparency Act and my bill prohibiting the launch of nuclear weapons by AI. But that’s just the beginning. Guided by the White House’s Blueprint for an AI Bill of Rights, Congress needs to pass broad regulations to stop this reckless race to build and deploy unsafe artificial intelligence. Decisions about how and where to use AI cannot be left to tech companies alone. They must center the communities most vulnerable to exploitation and harm from AI. And we must be open to working with allies and adversaries alike to avoid both military and civilian abuses of AI.

At the start of the nuclear age, rather than heed Oppenheimer’s warning on the dangers of an arms race, the U.S. fired the starting gun. Eight decades later, we have a moral responsibility and a clear interest in not repeating that mistake.

This is an opinion and analysis article, and the views expressed by the author or authors are not necessarily those of Scientific American.