If you’ve ever asked a chatbot a question and received nonsensical gibberish in reply, you already know that “artificial intelligence” isn’t always very intelligent.

And sometimes it isn’t all that artificial either. That’s one of the lessons from Amazon’s recent decision to dial back its much-ballyhooed “Just Walk Out” shopping technology, a seemingly science-fiction-esque system that actually functioned, in no small part, thanks to behind-the-scenes human labor.

This phenomenon is nicknamed “fauxtomation” because it “hides the human work and also falsely inflates the value of the ‘automated’ solution,” says Irina Raicu, director of the Internet Ethics program at Santa Clara University’s Markkula Center for Applied Ethics.

Take Just Walk Out: It promises a seamless retail experience in which customers at Amazon Fresh groceries or third-party stores can grab items from the shelf, get billed automatically and leave without ever needing to check out. But Amazon at one point had more than 1,000 workers in India who trained the Just Walk Out AI model—and manually reviewed some of its sales—according to an article published last year on the Information, a technology business website.

An anonymous source who’d worked on the Just Walk Out technology told the outlet that as many as 700 human reviews were needed for every 1,000 customer transactions. Amazon has disputed the Information’s characterization of its process. A company representative told Scientific American that while Amazon “can’t disclose numbers,” Just Walk Out has “far fewer” workers annotating shopping data than has been reported. In an April 17 blog post, Dilip Kumar, vice president of Amazon Web Services applications, wrote that “this is no different than any other AI system that places a high value on accuracy, where human reviewers are common.”

News of this technology’s retirement in U.S. Amazon Fresh stores—in favor of shopping carts that let customers scan items as they shop—has triggered renewed focus on an uncomfortable truth about Silicon Valley hype. Technologies heralded as automating away dull or dangerous work may still need humans in the loop, or as one Bloomberg columnist put it, AI software “often requires armies of human babysitters.”

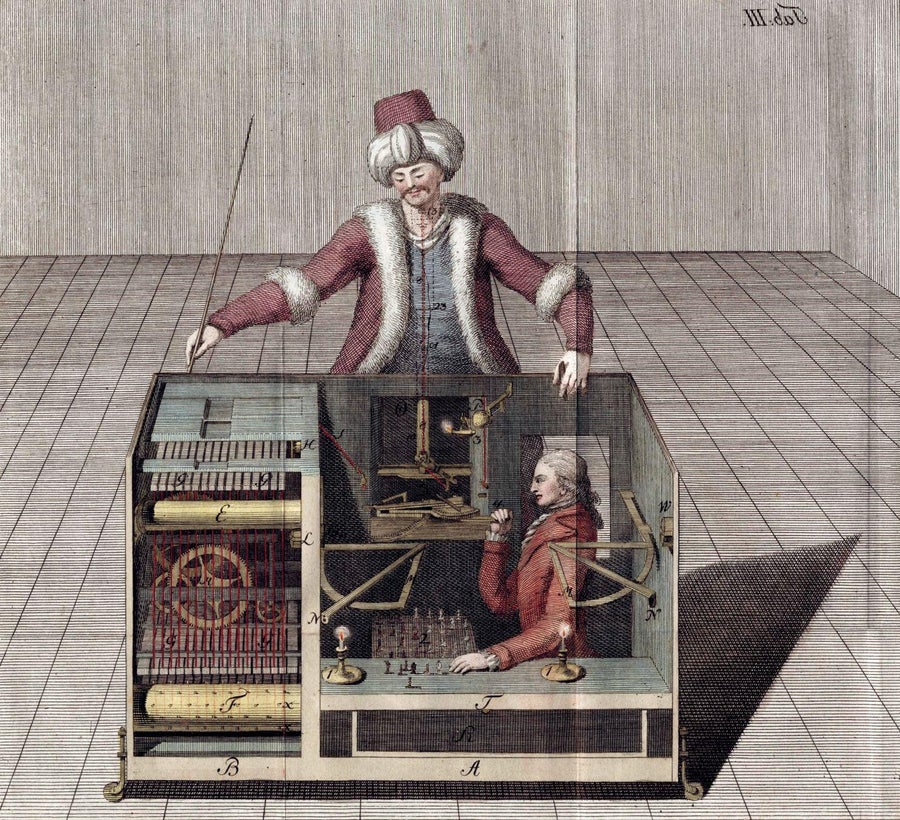

It’s hardly a new phenomenon. Throughout history, canny inventors and entrepreneurs have sought to slap the “automated” label on what was really just normal human activity. Take the “Mechanical Turk,” a robe-clad robot that inventor Wolfgang von Kempelen debuted in the early 1770s. Von Kempelen would tell observers that the humanoid machine could play full games of chess, opening up the automaton to show off clockwork mechanisms within, as Atlas Obscura recounted.

But the Mechanical Turk was a sham. As many contemporary observers began to suspect, a human operator was hiding in a chamber beneath the chessboard and controlling its movements by candlelight. The clockwork mechanisms were simply window dressing for an easily impressed audience.

Ueber den Schachspieler des Herrn von Kempelen und dessen Nachbildung (On Mr. von Kempelen’s Chess Player and Its Replica), by Joseph Racknitz, 1789.

Credit: Eraza Collection/Alamy Stock Photo

It’s perhaps fitting that Amazon now runs a platform with the same name that lets companies crowdsource piecemeal online tasks that require human judgment, such as labeling the training data that modern AI systems learn from. After all, charades in the style of the original Mechanical Turk—nominally automated systems that actually rely on human help, or what Jeff Bezos once dubbed “artificial artificial intelligence”—are common features of the modern Web, where an aura of technological sophistication can sometimes be more important than technological sophistication itself.

“The idea of bringing something inanimate to life is an old and seemingly very human yearning,” says David Gunkel, a professor of media studies at Northern Illinois University and author of The Machine Question: Critical Perspectives on AI, Robots and Ethics. Pointing to tales as varied as Mary Shelley’s 1818 novel Frankenstein and the 2014 film Ex Machina, he adds, “It is in these stories and scenarios that we play the role of God by creating new life out of inanimate matter. And it appears that the desire to actualize this outcome is so persistent and inescapable [that] we are willing to cheat and deceive ourselves in order to make it a reality.”

Even before products such as ChatGPT and DALL-E kicked off the current artificial intelligence boom, this dynamic played out with less ambitious AI products. Consider X.ai, a company that once touted an automated personal assistant that could schedule meetings and send out e-mails. As Bloomberg reported in 2016, the reason X.ai’s software seemed so lifelike was that it was, in a sense, literally alive: behind the scenes, human trainers were reviewing almost all inbound e-mails. Other concierge and personal assistant programs from that era were similarly human-dependent, and Bloomberg’s report noted that the lure of venture capital may have encouraged start-ups to frame ordinary workflows as cutting-edge.

It’s a dynamic that plays out across our increasingly online life. That food delivery robot carting a salad to your front door might actually be a young video game enthusiast piloting it from afar. You might think a social media algorithm is sifting out pornography from cat memes, when in reality human moderators in an office somewhere are making the toughest calls.

“This is not just a question of marketing appeal,” Raicu says. “It’s also a reflection of the current push to bring things to market before they actually work as intended or advertised. Some companies seem to view the ‘humans inside the machine’ as an interim step while the automation solution improves.”

In recent months the hype around generative AI has created exciting new opportunities for people to mask workaday human labor under a shiny, PR-friendly veneer of fauxtomation. Earlier this year, for instance, the Internet erupted in a furor over a posthumous George Carlin stand-up special that had purportedly simulated the late comic’s sense of humor with a machine-learning program trained on his oeuvre. But later, under the threat of a lawsuit from Carlin’s estate, one of the video’s creators admitted through a spokesperson that the supposedly AI-generated jokes had in fact been written by an ordinary person.

It was the latest in a centuries-old tradition that continues to enchant and ensnare unwary consumers: humans pretending to be machines pretending to be humans once again.